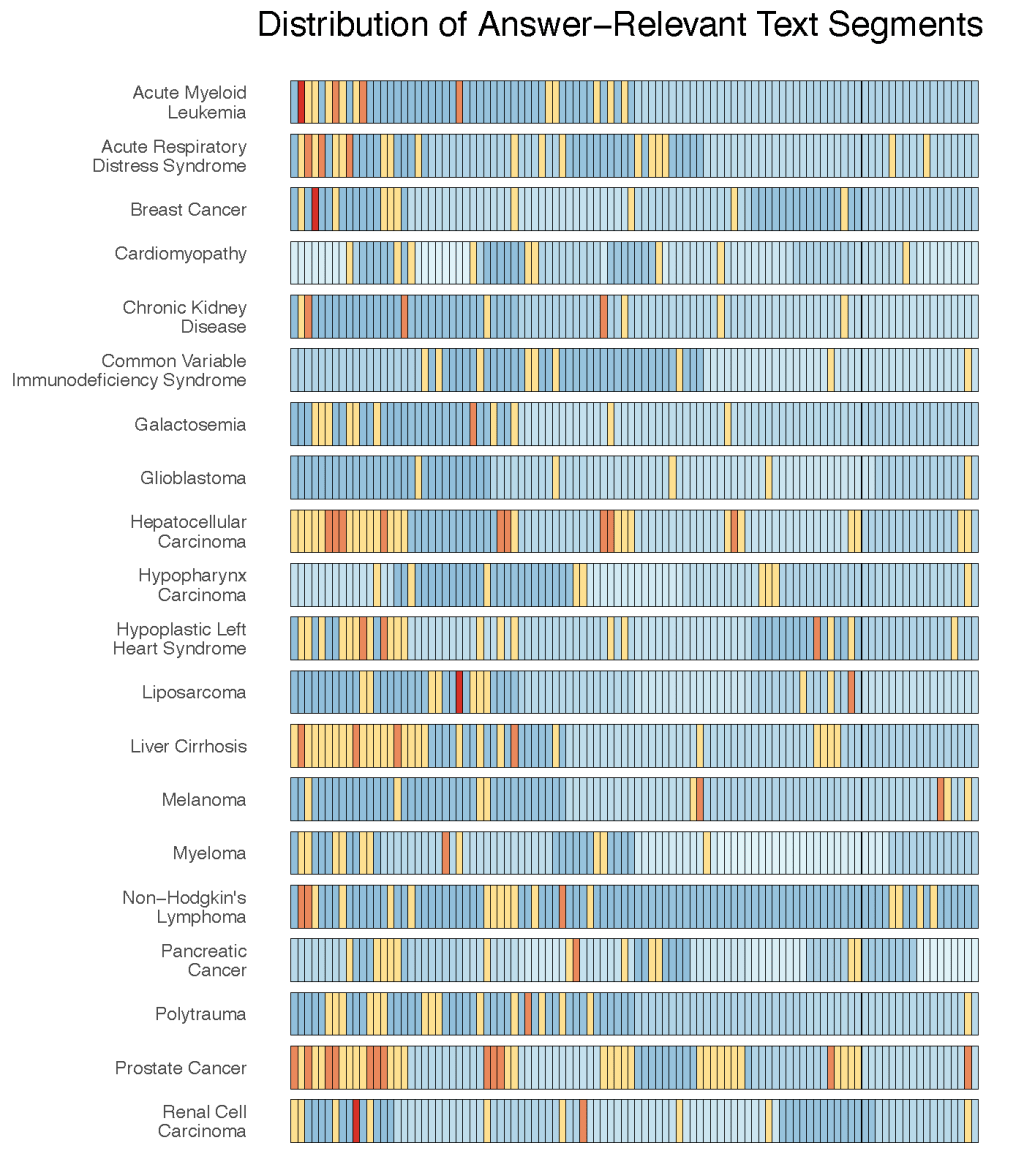

In this preprint, our team introduces the LongHealth benchmark, a novel dataset designed to assess the performance of large language models (LLMs) in extracting information from lengthy, real-world clinical documents. The benchmark consists of 20 fictional patient cases, each containing 5,090 to 6,754 words, covering a wide range of diseases. We challenge LLMs with 400 multiple-choice questions that test their ability to extract information, handle negation, and sort events in the correct order.

We evaluated nine open-source LLMs and the proprietary GPT-3.5 Turbo on the LongHealth benchmark. Our findings, available on arXiv (https://arxiv.org/abs/2401.14490) and GitHub (https://github.com/kbressem/LongHealth), reveal that while some models, such as Mixtral-8x7B-Instruct-v0.1, perform well in extracting information from single and multiple patient documents, all models struggle significantly when asked to identify missing information.

Our work highlights the potential of LLMs in processing lengthy clinical documents, but also underscores the need for further refinement before they can be reliably used in clinical settings. The LongHealth benchmark provides a more realistic assessment of LLMs‘ capabilities in healthcare, paving the way for the development of more robust models that can safely and effectively handle the complexities of real-world clinical data.