The United States Medical Licensing Examination (USMLE) is a rigorous, multi-step assessment that all medical students must pass to be licensed to practice medicine in the United States. This comprehensive exam assesses a wide range of medical knowledge and skills, covering topics from basic sciences to clinical medicine. The USMLE consists of three steps: The first step, Step 1, focuses on the foundational sciences, such as anatomy, physiology, and pharmacology. The second step, Step 2, assesses clinical knowledge and skills, including patient care and communication. The third step, Step 3, evaluates the ability to apply medical knowledge and understanding of biomedical and clinical science essential for the unsupervised practice of medicine.

Due to its comprehensive nature and the breadth of medical knowledge required, the USMLE has become a benchmark for evaluating the performance of large language models (LLMs) in the medical domain. In 2022, GPT-3.5 received attention for merely passing the exam (Kung et al., 2022), but with the release of GPT-4, the standard was raised. GPT-4 not only passed the exam but achieved an impressive zero-shot accuracy of 83.76% averaged across all three steps (Nori et al., 2023), surpassing the average human performance on the USMLE. However, GPT-4’s lack of transparency and accessibility presents a challenge. As a closed-source model, its architecture, parameter size, and training data remain largely unknown. Furthermore, it is subject to abrupt behavioral changes with API updates. For instance, it exhibited sudden laziness at the end of 2023, refusing to answer longer questions. In contrast, open-source models offer users full control and consistent behavior, as they only change when knowingly updated.

While open-source models have historically lagged behind their commercial counterparts, the release of Llama3 by Meta AI has the potential to alter the landscape. Despite having a smaller parameter count than GPT-4 or Claude 3 Opus (rumored to be close to a trillion), Llama3-70B has achieved highly competitive scores on established benchmarks like MMLU (Meta AI, 2023). This makes Llama3 an attractive candidate for evaluation on the USMLE questions. A strong performance would indicate the availability of capable open-source models for cost-effective, privacy-preserving on-site deployment in the medical domain. Moreover, it could facilitate the generation of highly domain-specific niche models that outperform commercial generalist models.

Therefore, we evaluated the performance of state-of-the-art open-source LLMs as well as the newest models from OpenAI on USMLE questions, focusing on all three steps of the exam. The models evaluated include GPT-4-Turbo, GPT-3.5-Turbo, Llama3-70B and Llama3-8B, as well as Mixtral-8x7B and Mixtral-8x22B from Mistral AI.

Methods

The latest versions of GPT-4-Turbo (version 2024-04-09) and GPT-3.5 (version 0125) were used in the analysis. For the open source models, the instruction-tuned models provided by Meta AI and Mistral AI were used. Analysis was performed using the API endpoints provided by OpenAI for GPT-4 and GPT-3.5, MistralAI for Mixtral-8x7B and Mixtral-8x22B, and together.ai for Llama3-70B and Llama3-8B.

All models were evaluated zero-shot on sample questions from all three USMLE Steps. Step 1 contained 119 questions, Step 2 contained 120 questions, and Step 3 contained 137 questions. Notably, this is not representative of a full exam. All models were presented with the same system prompt, which was then followed by a question with four answer choices. The table below shows an example model prompt and answer from Llama3-70B:

| system | Given the following USMLE-style multiple-choice question, please provide the answer in the format ‚Answer: [Option Letter]‘ (e.g., ‚Answer: A‘). followed by a short reasoning why you arrived at this answer. |

| user | What MRI feature is characteristic of ischemic cardiomyopathy due to coronary artery disease? Options: A. No late enhancement B. Epicardial enhancement C. Subendocardial enhancement D. Midwall enhancement |

| assistant | Answer: C. Subendocardial enhancement I arrived at this answer because ischemic cardiomyopathy due to coronary artery disease typically results in myocardial infarction, which affects the subendocardial region of the heart first. This is because the subendocardial region is the most susceptible to ischemia due to its location farthest from the epicardial coronary arteries. As a result, late gadolinium enhancement MRI typically shows subendocardial enhancement in areas of myocardial infarction. |

Given the semi-structured form of the model response, answer options could be extracted using regex and compared to the correct option to calculate accuracy for each step. Bootstrapping with 1000 iterations was used to calculate the 95% CI for the accuracy estimates.

Results

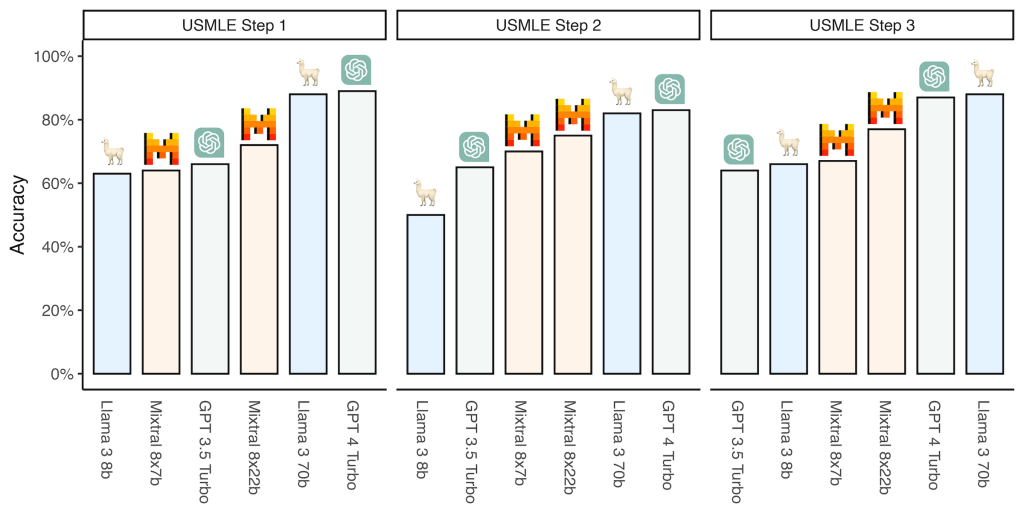

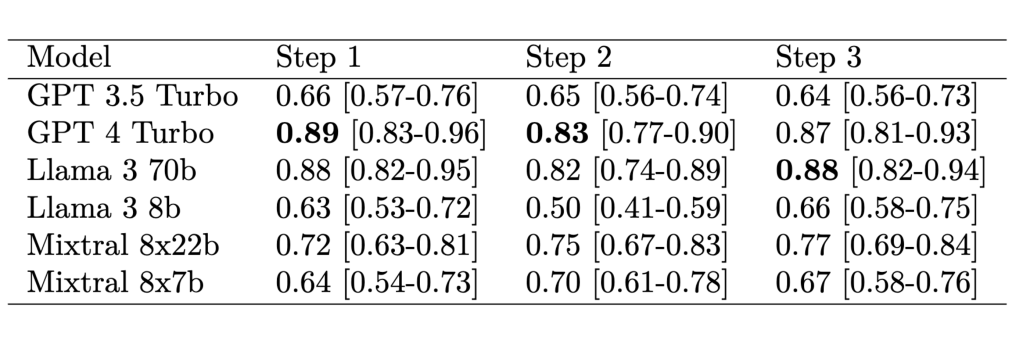

In our evaluation of the performance of various language models on the USMLE questions, we found that GPT-4-Turbo and Llama3-70B achieved the same average zero-shot performance across all three steps of the exam. GPT-4-Turbo obtained a score of 0.86 [95% CI: 0.83-0.90], while Llama3-70B matched this performance with a score of 0.86 [95% CI: 0.82-0.90]. Mixtral 8x22B followed with a score of 0.75 [95% CI: 0.70-0.79], and Mixtral 8x7B achieved a score of 0.67 [95% CI: 0.62-0.72]. GPT-3.5-Turbo, the predecessor to GPT-4-Turbo, scored 0.65 [95% CI: 0.60-0.70], while Llama3-8B, the smaller variant of Llama3, obtained a score of 0.60 [95% CI: 0.55-0.66].

Upon examining the performance of the models on individual steps of the USMLE, we observed that GPT-4-Turbo outperformed all other models on Step 1 and Step 2. However, Llama3-70B demonstrated the highest accuracy on Step 3 of the exam. The individual accuracy estimates for each model are presented in the below table and visually compared in the above figure.

Discussion

The competitive performance of the open-source model Llama3-70B against the state-of-the-art commercial model GPT-4-Turbo on the USMLE questions demonstrates the potential of open-source models to tackle complex, domain-specific tasks in the medical field. Of particular note is Llama3-70B’s superiority on Step 3, albeit by a narrow margin. This is the first time an open-source language model has performed on par with the leading proprietary commercial models, democratizing access to AI technology and making it more accessible to researchers and developers who may not have the resources to develop their own models. Powerful open source models facilitate the development of cost-effective, privacy-preserving, and customizable AI solutions for healthcare by providing greater transparency and control over the model’s architecture, training data, and behavior (Schur et al., 2023).

Despite these promising early results, it is questionable whether USMLE questions are a reliable measure of medical knowledge. While multiple-choice questions are intuitive, they test only specific types of knowledge and do not represent the full range of clinical practice. To truly evaluate LLMs in medicine, more sophisticated, clinical practice-based benchmarks beyond the USMLE are needed. These new benchmarks should not only assess single points of knowledge, but also test the complete knowledge of a model, adhere to clinical guidelines, and capture the complexity and ambiguity of cases that may differ from standardized test questions. They should be more in-depth than a medical licensing exam and closer to board certification exams.

Furthermore, using LLMs solely as a source of knowledge may not be the best application in medicine. Instead, LLMs can be used for a variety of tasks that take advantage of their natural language processing capabilities (Thirunavukarasu et al., Clusman et al.). For example, LLMs can be used to extract information from clinical documents (Adams et al.), automatically identifying and extracting relevant data points from large volumes of unstructured text. This can reduce the manual effort required to process large volumes of clinical notes, discharge summaries, and other medical records, and help healthcare professionals find information faster.

In addition, LLMs can help structure and standardize medical data, such as post hoc structured reporting (Adams et al.) or mapping free-text diagnoses to standardized codes like ICD-10, although the latter still presents a considerable challenge for current models (Soroush et al., Boyle et al.). Despite major standardization efforts, such as the implementation of FIHR (Fast Healthcare Interoperability Resources), hospital systems are still challenged by non-standardized data. LLMs can help with standardization to facilitate interoperability, data analysis and billing processes.

In addition, LLMs can be used not only as knowledge bases, but also as reasoning or inference engines (Truhn et al.), e.g. coupled with a database, such as in a Retrieval-Augmented Generation workflow. In this approach, LLMs can be used to generate natural language queries based on a given clinical context, retrieve relevant information from a medical knowledge base, and then generate coherent and contextually appropriate responses. This combination of LLMs and structured medical knowledge can provide more accurate and reliable clinical decision support.

Although previous work has explored these applications of LLMs in medicine, benchmarks that evaluate LLMs in these scenarios are still sparse (e.g., Xiong et. al, Adams et al.). To fully realize the potential of LLMs in healthcare, it is crucial to develop comprehensive benchmarks that evaluate their performance not only in answering medical questions, but also in tasks such as information extraction or data structuring.

However, the strong performance of Llama3-70B on the USMLE questions and the likely improved performance of Llama-400B, to be released later this year, show that open source is closing the performance gap with proprietary models in healthcare. While performance improvements are still needed, these new open models will help empower researchers, developers, and healthcare professionals to collaborate and innovate, ultimately leading to better patient care and more efficient healthcare systems.